ATtiny85 First Sketch

My first successful try with the ATtiny85, programmed through an Arduino UNO. I followed the instructions found here.


This robot can be built with either of two circuits: one that uses a voltage trigger and another that uses diodes (Zener or signal). In the end, we decided to go with the signal diode circuit.

The electronic components in this BEAM solar robot are: a Voltaic 2 W, 6 V solar panel; a 1 F capacitor; a 6 V, 280 mA DC motor; a PNP transistor (2N3906); two signal diodes in series; a 2.2 kΩ resistor; and an NPN transistor (2N3904). The circuit works as follows. The capacitor charges until the PNP transistor (2N3906) receives base current through the signal diodes and turns on. This turns on the NPN transistor (2N3904), and the capacitor discharges through the motor. Once the NPN is on, the 2.2 kΩ resistor starts supplying base current to the PNP, so the circuit snaps on and stays latched. When the capacitor voltage drops below about 1 V, the PNP turns off, then the NPN turns off and disconnects the motor from the capacitor, which starts charging again.
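The snap-on/snap-off behaviour above is a hysteresis loop: the motor switches on at a higher voltage than it switches off. A minimal C++ model of that loop, with hypothetical names, is sketched below. The turn-off voltage of about 1 V comes from the text; the turn-on voltage `vOn` is an assumption you would measure on the real circuit. The energy per burst is the standard capacitor-energy difference, E = ½C(vOn² − vOff²).

```cpp
// Hypothetical model of the diode-triggered solar engine described above.
// vOn is an ASSUMED trigger voltage (set by the diodes and the PNP's
// base-emitter drop); vOff of about 1 V is taken from the text.
class SolarEngine {
public:
    SolarEngine(double vOn, double vOff) : vOn_(vOn), vOff_(vOff) {}

    // Returns true while the motor is connected to the capacitor.
    bool update(double capVoltage) {
        if (!motorOn_ && capVoltage >= vOn_) {
            motorOn_ = true;   // PNP and NPN latch on ("snap on")
        } else if (motorOn_ && capVoltage < vOff_) {
            motorOn_ = false;  // both transistors release; capacitor recharges
        }
        return motorOn_;       // between vOff and vOn the previous state holds
    }

private:
    double vOn_;
    double vOff_;
    bool motorOn_ = false;
};

// Energy delivered to the motor in one burst, in joules:
// E = 1/2 * C * (vOn^2 - vOff^2)
double burstEnergy(double capacitanceF, double vOn, double vOff) {
    return 0.5 * capacitanceF * (vOn * vOn - vOff * vOff);
}
```

For example, with the 1 F capacitor and an assumed 3 V trigger, each burst would deliver 0.5 × 1 × (9 − 1) = 4 J to the motor.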

We switched to this motor after a failed attempt with a high-efficiency motor (4 V, 30 mA). Despite this change, the torque of the 6 V motor (about 180 gm/s^2) with 1.4 cm radius wheels still isn't enough to drive the entire rig (circuit, plastic disc and plastic sphere). Next steps could be getting a more powerful motor or making the entire robot lighter.

How could we log traffic at ITP's entrance and see the difference between elevator use and stair use? Using a solar-powered DIY Arduino, we decided to visualize this data (and store it in a .csv table) on the screen between the elevators at ITP's entrance.

After building a DIY Arduino that could be powered by solar energy, we followed Kina's tutorial to set up a basic solar rig that charges a 3.7 V, 1200 mAh LiPo battery. We connected the solar panels in series and ended up with an open-circuit voltage of 13 V. Our current readings, however, were only 4 mA.
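A quick way to see why a 4 mA reading is a problem is to estimate the ideal charge time: capacity (mAh) divided by charging current (mA) gives hours. The small C++ helper below does that arithmetic; it is an idealized sketch that ignores charger losses and the way panel current changes with sunlight.

```cpp
// Idealized charge time in hours: capacity (mAh) / current (mA).
// Ignores charger efficiency, the LiPo charge curve and changing sunlight.
double idealChargeHours(double capacityMah, double currentMa) {
    return capacityMah / currentMa;
}
```

With the numbers above, idealChargeHours(1200, 4) gives 300 hours, or 12.5 days of continuous charging at that current.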

We hooked the Arduino data up to a Processing sketch that overwrites the table data of a .csv file every second. All of the code can be found in this link.
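The overwrite-every-second behaviour boils down to reopening the file in truncate mode and writing the whole table again. The original sketch is in Processing; below is a C++ equivalent for illustration only. The function name and the `elevator,stairs` column names are hypothetical, and the caller is assumed to invoke it once per second.

```cpp
#include <fstream>
#include <string>

// Hypothetical: rewrite the whole CSV with the latest counts.
// std::ios::trunc discards the previous contents on every call,
// so the file always holds a single, current table.
bool writeCounts(const std::string& path, long elevatorCount, long stairsCount) {
    std::ofstream out(path, std::ios::out | std::ios::trunc);
    if (!out) return false;  // could not open the file
    out << "elevator,stairs\n" << elevatorCount << ',' << stairsCount << '\n';
    return static_cast<bool>(out);  // false if any write failed
}
```

Overwriting rather than appending keeps the file small and always current, at the cost of losing history; appending a timestamped row would be the alternative if the log needs to be kept.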

This is a performance made possible by the Graduate Student Organization (GSO) Grant at NYU's Tisch School of the Arts. In collaboration with Daniela Tenhamm-Tejos, Jana L. Pickart and Ansh Pattel, we explored body language and psychogeography in urban spaces. I was the developer behind the gesture recognition code. For the official website, please visit this link.

For this project I created a series of visual effects that responded to the performer's choreography, the poet's voice and audience interaction. These effects were created with OpenFrameworks, a C++ toolkit. Here is a sneak peek of these effects:

How can a fabricated object have an interactive life? The M-Code Box is a manifestation of words translated into tangible Morse code percussion. You can find the code here. To build an M-Code Box you need an Arduino UNO, a solenoid (with an external power source and a simple circuit) and a laptop running Processing.
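The core of the translation step is a lookup from characters to International Morse Code. The project's own code lives in the linked repository; the C++ function below is a hypothetical stand-in showing that lookup. It separates letters with a space and words with " / ", and skips characters that have no Morse code. Turning each dot and dash into a solenoid strike of the right length is left out.

```cpp
#include <cctype>
#include <string>

// Hypothetical helper: translate text into International Morse Code.
// Letters are separated by a space, words by " / ".
// Characters without a Morse code are skipped.
std::string toMorse(const std::string& text) {
    static const char* letters[26] = {
        ".-", "-...", "-.-.", "-..", ".", "..-.", "--.", "....", "..",
        ".---", "-.-", ".-..", "--", "-.", "---", ".--.", "--.-", ".-.",
        "...", "-", "..-", "...-", ".--", "-..-", "-.--", "--.."};
    static const char* digits[10] = {
        "-----", ".----", "..---", "...--", "....-",
        ".....", "-....", "--...", "---..", "----."};

    std::string out;
    bool pendingWordGap = false;  // a space was seen since the last letter
    for (char raw : text) {
        unsigned char c = static_cast<unsigned char>(raw);
        const char* code = nullptr;
        if (std::isalpha(c)) {
            code = letters[std::toupper(c) - 'A'];
        } else if (std::isdigit(c)) {
            code = digits[c - '0'];
        } else if (std::isspace(c)) {
            pendingWordGap = !out.empty();  // ignore leading spaces
            continue;
        }
        if (code == nullptr) continue;  // unsupported character
        if (!out.empty()) out += pendingWordGap ? " / " : " ";
        out += code;
        pendingWordGap = false;
    }
    return out;
}
```

For example, toMorse("SOS") returns "... --- ...".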

There are two ways to take this project further. One is to add an interpreter component that records the box's sounds and re-encodes them into words, like conversation triggers. The other is to start thinking about musical compositions by multiplying this box and varying its materials and dimensions.

This project came from combining two previous projects: the Box Fab exploration of live hinges and the Morse Code Translator, which translates typed text into physical pulses.

Inspired by Hieronymus Bosch's succulent imagery, I decided to make a lamp. It continues one of the happy accidents from the live-hinge box: an exploration that pushes the notion of wood bending further. The result was an interesting exercise in light composition, but not entirely satisfactory in plastic-arts terms. This is how the result looked:

A key lesson for future creations involving several bent pieces that ultimately assemble into one shape is to bend them all together instead of separately. Another insight from this exploration was the progressive ability to successfully bend 1/4 inch plywood. There were two live-hinge patterns in this lamp shade. The lower pieces used a more flexible pattern, while the upper pieces had little flexibility. Both were bent with hot water, but the upper ones also involved a DIY circular press that helped create a memory in the wood fibers. Here's a linear documentation of the entire fabrication process:

These were the live hinges used in the lamp shade design, upper and lower respectively.

This project is an ongoing pursuit of the question: how do you overcome a creative block? Partnered with Lutfiadi Rahmanto, we started out scribbling, sketching and describing the problem to better understand what it meant for each of us, how we scope it and how we usually respond to it.

From the first session we were able to narrow the idea down to a specific goal: a tool to aid inspiration in the creative process. This led us to consider various aspects of the target scenario and to start asking other creatives about it. We sought to better understand, qualitatively, how creatives describe a creative block and, more importantly, how they overcome it. This session also let us reflect on how to help with the starting point of ideation, often a hard endeavor. In the end, a resonating answer was linking unrelated words, concepts or ideas.

We also reviewed two articles with subject matter experts on creative block and how to overcome it ("How to Break Through Your Creative Block: Strategies from 90 of Today's Most Exciting Creators" and "Advice from Artists on How to Overcome Creative Block, Handle Criticism, and Nurture Your Sense of Self-Worth"). There we found a collage of our initial hypothesis with additional components such as remixing, drawn from Jessica Hagy's wonderful analogical method of overcoming her creative block: randomly grabbing a book, opening it to a random page and linking "the seed of a thousand stories". Another valuable insight was creating a space of diverted focus, away from the task that generates the block. We also found a clear experience-design directive for our app: balance constraint (structured, scrambled data from the API) with freedom (imaginative play).

After validating our intuitive hypotheses on how to address the problem through the contextual inquiries and online articles, we came up with a solid design brief:

Encourage a diverted focus in which people create ideas by scrambling data from the Cooper Hewitt's database into random phrases.
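The brief's core mechanic, linking unrelated words into a phrase, can be sketched as random picks from a few word pools. The C++ function below is a hypothetical illustration: the three pools and the "X Y made of Z" template are assumptions standing in for text pulled from the Cooper Hewitt's database, and no real API call is made.

```cpp
#include <cstddef>
#include <random>
#include <string>
#include <vector>

// Hypothetical sketch: build a random "idea" phrase by linking unrelated
// words. The pools stand in for data fetched from the collection database.
// Precondition: every pool is non-empty.
std::string scramblePhrase(const std::vector<std::string>& adjectives,
                           const std::vector<std::string>& objects,
                           const std::vector<std::string>& materials,
                           std::mt19937& rng) {
    auto pick = [&rng](const std::vector<std::string>& words) {
        std::uniform_int_distribution<std::size_t> dist(0, words.size() - 1);
        return words[dist(rng)];
    };
    return pick(adjectives) + " " + pick(objects) + " made of " + pick(materials);
}
```

Passing the random generator in, rather than creating it inside the function, makes it easy to reproduce a phrase by reusing a seed during user tests.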

Through this research we created seven different behavior patterns and mapped them onto this two-axis map, which defines the extent to which personas behave between casual/serious and unique/remix.

For a more detailed description of these archetype behaviors visit this link

This enabled us to create our guiding design path through what Lola Bates-Campbell describes as the MUSE: an outlier persona that directs and answers the usual nuances behind designing, in this case our mobile application tool to help Mae Cherson with her creative block. We determined her goals and underlying motivations, what she usually does (activities) in her creative environment and how she deals with small and large creative blocks in her workspace. We also describe her attitudes toward this blocking scenario and how her feelings get entangled whenever she seeks inspiration. Other traits were determined as well and can be read in more detail through this link. Overall, we crafted this Muse as a reference point for creating an inspirational experience for the selected archetypes, The Clumsy Reliever and The Medley Maker.

In parallel with the archetype mapping, we began thinking about how to engage our audience: artists, designers, writers, thinkers, makers, tinkerers, all poiesis casters. We soon realized the opportunity to captivate our audience through a game-like interaction: a gameplay that requires simple gestures and encourages discoverability. Some of the games we took as reference are Candy Crush and 2 Dots, two simple games that stand out for their heavy and widespread engagement.

With research cues and possible game-like affordances in mind, there's plenty of space to weave tentative design solutions. Hence we spent some time sketching layouts, concepts, poetic interactions and nonsense infractions.

On the other hand, we made sense of it all and sought a balance between amusement and feasibility. At the end of this session we came up with three design layout concepts and general affordances (calls to interaction): Linking, Discovering and Dragging.

From these concepts we started making interactive prototypes. While creating the Discovering prototype, we realized that people's intuitive mental model of a Candy Crush-like interaction did not match our design intent, and trying to match it felt overly complicated and forced. This is why we created prototypes only for the Linking and Dragging concepts.

Another prototype explores the underlying preference between text-driven and visually driven inspiration. While testing these prototypes we realized that some people tend to feel more inspired by imagining the words from a text, while others feel more inspired by visual cues. This prototype allows both explorations.

The next step is to select one gameplay interaction from our user tests and syntactically address the text data from the API.

This is another interaction mode, Remixing Mode, conceived after Katherine's valuable feedback on our final prototype, which can be accessed through this link.

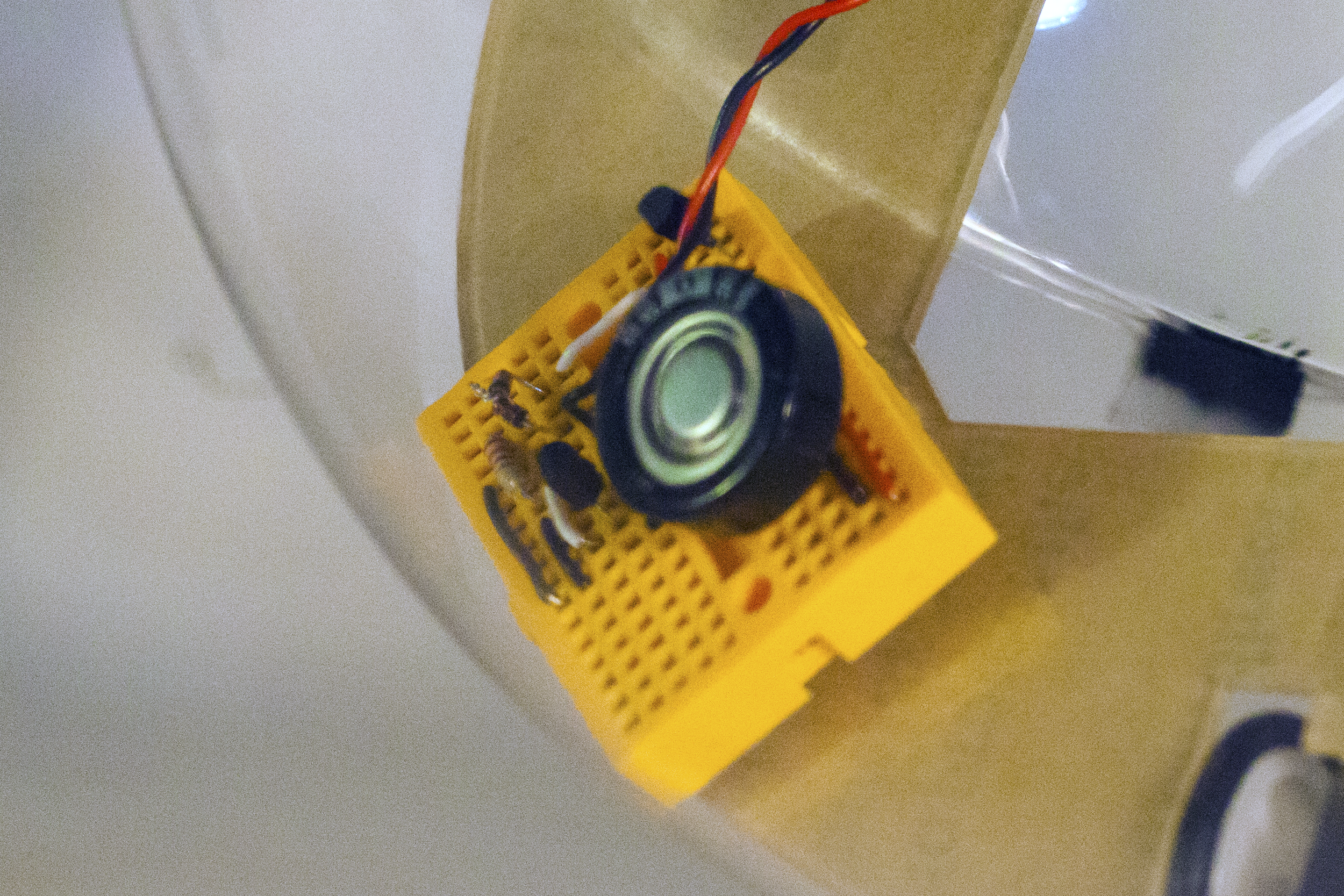

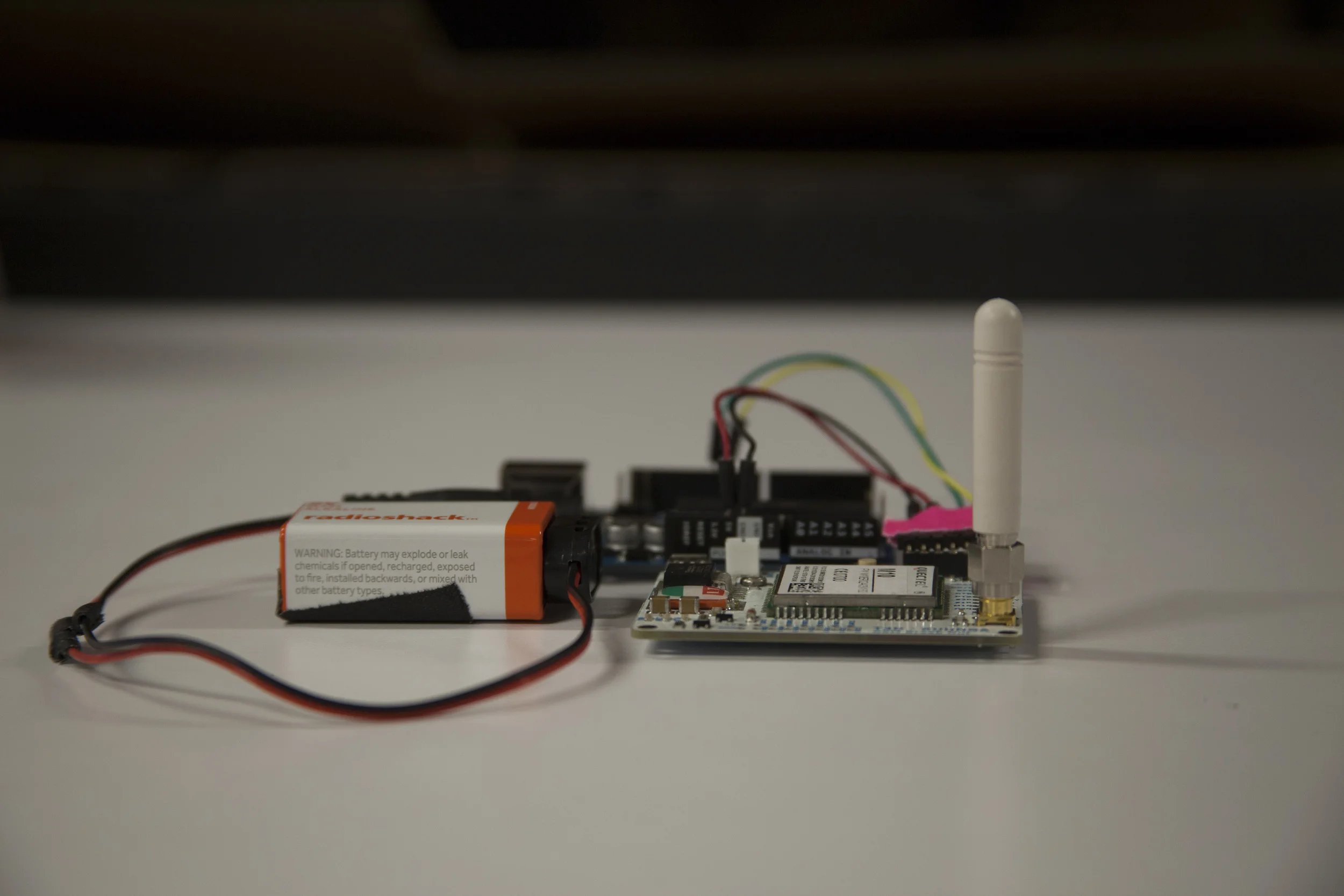

For our GSM class final, partnered with Clara and Karthik, we created a geofence hooked to an Arduino Uno.

If you're receiving garbled text when reading the incoming data from the GSM board through the Arduino, check whether both boards are working at the same baud rate (9600). To change the baud rate of the GSM board (Quectel M10) through an FTDI adapter and CoolTerm:

Now plug the GSM board into the chosen SoftwareSerial pins (10 and 11), and set the fence center point (homeLat and homeLon), the fence radius in km (thresholdDistance) and the phone number it will be controlled from (phoneNumber). Then upload the sketch and open the Serial Monitor. The code can be found in this GitHub repo. Enjoy!
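The fence check itself comes down to measuring the distance between the GPS fix and (homeLat, homeLon) and comparing it with thresholdDistance. The repository's exact method isn't described here; the haversine formula is one common way to do it, sketched below in C++ with a mean Earth radius of 6371 km. `haversineKm` and `outsideFence` are hypothetical names; only the three parameter names come from the text.

```cpp
#include <cmath>

// Great-circle distance in km between two lat/lon points given in degrees,
// using the haversine formula and a mean Earth radius of 6371 km.
double haversineKm(double lat1, double lon1, double lat2, double lon2) {
    const double kEarthRadiusKm = 6371.0;
    const double kDegToRad = 3.14159265358979323846 / 180.0;
    double dLat = (lat2 - lat1) * kDegToRad;
    double dLon = (lon2 - lon1) * kDegToRad;
    double a = std::sin(dLat / 2) * std::sin(dLat / 2) +
               std::cos(lat1 * kDegToRad) * std::cos(lat2 * kDegToRad) *
               std::sin(dLon / 2) * std::sin(dLon / 2);
    return 2.0 * kEarthRadiusKm * std::atan2(std::sqrt(a), std::sqrt(1.0 - a));
}

// True when the current fix lies outside the circular fence
// centered on (homeLat, homeLon) with radius thresholdDistance (km).
bool outsideFence(double lat, double lon, double homeLat, double homeLon,
                  double thresholdDistance) {
    return haversineKm(homeLat, homeLon, lat, lon) > thresholdDistance;
}
```

On an Arduino UNO, `double` is only 32-bit, so distances of a few meters will be noisy; for a fence measured in kilometers that precision is usually enough.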

The hardware used for this project was an Arduino UNO and one of Benedetta's custom GSM boards with GPS enabled. This setup could potentially work stand-alone; however, it is advisable to make sure beforehand that the power source has enough capacity (watt-hours) to run the setup for the desired time.

Together with Oryan Inbar, we addressed the kinetic energy challenge by powering an LED through a bicycle trainer setup. After repurposing the stepper motor from a bill printer, we explored different circuit possibilities involving capacitors, resistors and charging configurations. In the end, our circuit is composed of the two rectified coils from the stepper connected in series, a two-way switch that lets us charge the capacitors first and light the LED afterwards, three 1 F capacitors, one 330 Ω resistor and a counter LED (which we believe lights at 1.7 V).

It was surprising to see the voltage fall from around 29 V to 2.2 V whenever we plugged in the LED. We also decided to connect the two coils to two bridge rectifiers that power the circuit in series. This, together with the overall capacitance, indicated that we needed to charge the capacitors before connecting the LED, which is the reason behind the two-way switch. After sorting out the general circuitry, we decided to use our strongest muscles as the source of power, along with an already solved mechanism: a bicycle.
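The 330 Ω resistor limits the LED current according to Ohm's law, I = (V − Vf) / R. The C++ helper below works that out; it assumes the 2.2 V loaded voltage and the estimated 1.7 V forward voltage from the text, and treats the forward voltage as constant, which is a simplification of a real LED.

```cpp
// Ohm's-law estimate of LED current through a series resistor:
// I = (V_supply - V_forward) / R, in amps.
// Returns 0 when the supply cannot overcome the LED's forward voltage.
double ledCurrentAmps(double supplyVolts, double forwardVolts, double resistorOhms) {
    double headroom = supplyVolts - forwardVolts;
    return headroom > 0.0 ? headroom / resistorOhms : 0.0;
}
```

With those numbers the estimate is (2.2 − 1.7) / 330 ≈ 1.5 mA, a small current that would help explain why the capacitors can keep the LED lit for a while.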

We built a bicycle trainer to interface the bicycle with the stepper motor, which we reused from a bill printer taken from the shop's junk shelf. The kinetic energy delivered to the stepper motor can be worked out from the gear configuration. We reused the printer's embedded gear system and found that the driver gear has an 11:1 ratio relative to the driven motor gear. In turn, the driver of this gear system was connected to the bike's back wheel at roughly a 1:35 ratio.
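In a compound drive, the overall ratio is the product of the stage ratios. The C++ sketch below assumes that both stages step speed up: the back wheel turns the driver about 35 times per wheel revolution, and the driver turns the motor gear 11 times per driver revolution. That reading of the 1:35 and 11:1 figures is an assumption.

```cpp
// Overall step-up ratio of a two-stage drive: the product of the stages.
// ASSUMED: wheelToDriver = driver revolutions per wheel revolution (~35),
//          driverToMotor = motor revolutions per driver revolution (11).
double overallRatio(double wheelToDriver, double driverToMotor) {
    return wheelToDriver * driverToMotor;
}

// Motor speed for a given back-wheel speed, in the same rpm units.
double motorRpm(double wheelRpm, double wheelToDriver, double driverToMotor) {
    return wheelRpm * overallRatio(wheelToDriver, driverToMotor);
}
```

Under that assumption the motor would turn about 35 × 11 = 385 times per wheel revolution, so a wheel turning at 60 rpm would spin the stepper at about 23,100 rpm (ignoring slip).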

The general idea came from the hula hoop as a cyclical activity. After some thought and a few happy accidents, it was clear that a scaled-down version would be more suitable. As a rapid prototyping strategy, glow necklaces came in pretty handy. They not only scale well to the assignment's brief, but also clearly communicate the data visualization of the activity's tentative feedback.

After some user tests, it was clear that people intuitively spin the ring in either direction, clockwise or counterclockwise, which means the counting task should be designed around this ambivalence.

This is the reason behind the two sides (white and black) of the object. When the object is spun with the white side up, it counts; whenever the black side is up, it undoes the counts. The general idea is to see the circle light up in relation to the goal's progress, whether counting up or down. The mode and quantity are set through embedded knobs, as shown in the third illustration.
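The counting logic described above fits in a small state machine. The C++ class below is a hypothetical model: it treats each full spin as one event, adds a count with the white side up, undoes one with the black side up, never drops below zero, and exposes the fraction of the circle to light. The goal stands in for the quantity set with the knobs.

```cpp
// Hypothetical model of the two-sided counting ring.
enum class Side { White, Black };

class SpinCounter {
public:
    // goal: the quantity chosen with the knobs (at least 1).
    explicit SpinCounter(int goal) : goal_(goal > 0 ? goal : 1) {}

    // One full spin: white side up counts, black side up undoes a count.
    void spin(Side side) {
        if (side == Side::White) {
            ++count_;
        } else if (count_ > 0) {
            --count_;
        }
    }

    int count() const { return count_; }

    // Fraction of the circle to light, capped at 1.0 once the goal is reached.
    double progress() const {
        double p = static_cast<double>(count_) / goal_;
        return p > 1.0 ? 1.0 : p;
    }

private:
    int goal_;
    int count_ = 0;
};
```

Clamping at zero means an over-eager undo simply leaves the circle dark instead of producing negative progress.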

Having a prototyped experience makes UX mobile sketching easier. These diagrams also make the panoramic view of the overall experience much clearer.

Translating this physical experience into a mobile digital one could go two ways; in the end, the chosen alternative will depend on user tests. The initial idea is to spin the mobile device one way to count and the other way to undo the count. However, spinning a physical device might not be intuitive enough in mobile applications. The other hypothetical alternative is a circular swiping gesture, used consistently across the whole app's interaction.
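For the circular-swipe alternative, one simple way to tell a clockwise swipe from a counterclockwise one is the sign of the path's signed area (the shoelace formula). The C++ sketch below is a hypothetical classifier, not an existing gesture API. It assumes screen coordinates, where y grows downward, so a positive area means the finger moved clockwise on screen. `minArea` is an assumed tuning value that filters out near-straight swipes.

```cpp
#include <cstddef>
#include <vector>

struct Point { double x; double y; };

enum class Turn { Clockwise, CounterClockwise, None };

// Hypothetical gesture classifier based on the shoelace (signed area) formula.
// Screen coordinates (y grows downward): positive area = clockwise on screen.
Turn classifyCircularSwipe(const std::vector<Point>& path, double minArea) {
    if (path.size() < 3) return Turn::None;
    double twiceArea = 0.0;
    for (std::size_t i = 0; i < path.size(); ++i) {
        const Point& a = path[i];
        const Point& b = path[(i + 1) % path.size()];  // close the loop
        twiceArea += a.x * b.y - b.x * a.y;
    }
    double area = twiceArea / 2.0;
    if (area > minArea) return Turn::Clockwise;
    if (area < -minArea) return Turn::CounterClockwise;
    return Turn::None;  // too flat to count as a circle
}
```

Mapped onto the ring's behaviour, a clockwise result could count and a counterclockwise one could undo, mirroring the physical object's two directions.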

The ideal ordering experience on a plane (and maybe elsewhere as well) would be to receive food pairing suggestions based on the person's agenda, a rest prediction from sensed biometric data and their medical history. Before creating the wireframe, I deconstructed the information into a Hierarchical Task Analysis to get a better sense of the drill-down flow of the overall UI.

There are 3 sub-levels in the order flow, except for coffee, which takes two additional ones (type of milk and sweetness). Creating this analysis let me decide on micro-interactions such as reducing choices to Yes or No answers whenever a refined choice is needed, for instance water with or without ice. This allows for a consistent UI flow overall.

Choose from the different food typologies

Choose a determined product from a particular typology

Refine your choices by answering simple Yes/No questions whenever necessary

Make sure everything selected is correct

Simple gesture to order

The overall circle layout is a tentative proposal toward the cyclic rituals behind meals.

We decided to work with live hinges for our first project. We started with a proof of concept in black foam.

After some tests, we chose the "parametric kerf #6" pattern given its generous flexibility. For our overall box concept we combined the live-hinge method with a dice-inspired semi-cubic volume. Our next step was to start cutting the two apparently identical pieces.

However, our estimate for covering the half circles was inaccurate, which prevented the planes from fully assembling with one another.

For our second iteration, we followed Eric's advice and jumped to prototyping with our final material, wood. This time we planned ahead and did a few calculations to make sure the height of the sides would match the half-circle perimeters. We also planned for 45º edges, so we created 5 mm inner reference raster edges to sand to after cutting. Since the material is 5 mm thick, we realized that for 45º edges we needed a "square" reference to roughly know our limit when sanding off the excess.
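The matching calculation is the half-circle perimeter, π × r. Because the kerfed side has thickness, it is common to measure the bend along the strip's mid-thickness, so the C++ helper below uses r + t/2. Both the function name and that mid-thickness (neutral axis) rule are assumptions for illustration, not the exact calculation used here.

```cpp
// Length of live-hinge strip needed to wrap a half circle of radius r (mm).
// ASSUMPTION: the strip wraps the outside of the half circle and its length
// is measured along the strip's mid-thickness, i.e. at radius r + t/2.
double halfCircleStripLength(double radiusMm, double thicknessMm) {
    const double kPi = 3.14159265358979323846;
    return kPi * (radiusMm + thicknessMm / 2.0);
}
```

For a hypothetical 50 mm radius, ignoring thickness gives about 157.1 mm, while accounting for the 5 mm material gives about 164.9 mm, a difference large enough to keep the pieces from closing.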

On our second laser cutting attempt, we ran into some unexpected technical obstacles. Besides setting up the job a bit too high, the machine also cut with an offset (the reason is still unknown). Last but not least, the 60 W laser cutter's settings differ from the 50 W one's when it comes to edging/rastering black. This third setback was in fact a happy accident that made us realize we could simplify the entire process by scaling one of the sides by the thickness of the material. Our third cut ran quite smoothly.

We also explored ways of conveniently bending wood with warm water and overnight drying. The result wasn't perfect, but from what we learned in this first experiment we now know how to make a perfectly matching wood bend. In the end, the magnetic closing lid we had planned wasn't necessary. This is our final prototype, along with our inspirational dice.

A simple snippet to make an LED light up when receiving an SMS, using one of Benedetta's GSM shields.

It lights the LED on pin 13 of the Arduino board. After several failed attempts at writing from the Arduino Serial Monitor, we decided to do it through CoolTerm.

This is the code:

```cpp
#include <SoftwareSerial.h>

// GSM board on software serial: RX = pin 2, TX = pin 3
SoftwareSerial mySerial(2, 3);

const int ledPin = 13;  // on-board LED of the Arduino UNO

char inChar = 0;
char message[] = "que pasa HUMBA!";

void setup()
{
  Serial.begin(9600);
  Serial.println("Hello Debug Terminal!");

  // Set the data rate for the SoftwareSerial port (must match the GSM board)
  mySerial.begin(9600);

  pinMode(ledPin, OUTPUT);

  // Optional: uncomment the block below to send an SMS on startup.
  //
  // // Turn off echo from GSM
  // mySerial.print("ATE0");
  // mySerial.print("\r");
  // delay(300);
  //
  // // Set the module to text mode
  // mySerial.print("AT+CMGF=1");
  // mySerial.print("\r");
  // delay(500);
  //
  // // Send the following SMS to the following phone number
  // mySerial.write("AT+CMGS=\"");
  // // CHANGE THIS NUMBER! CHANGE THIS NUMBER! CHANGE THIS NUMBER!
  // // 129 for domestic #s, 145 if with + in front of #
  // mySerial.write("6313180614\",129");
  // mySerial.write("\r");
  // delay(300);
  // // TYPE THE BODY OF THE TEXT HERE! 160 CHAR MAX!
  // mySerial.write(message);
  // // Special character to tell the module to send the message
  // mySerial.write(0x1A);
  // delay(500);
}

void loop()  // run over and over
{
  // Forward every byte from the GSM board to the terminal and blink the LED
  // briefly, so any incoming data (including new-SMS notifications) lights it.
  if (mySerial.available()) {
    inChar = mySerial.read();
    Serial.write(inChar);
    digitalWrite(ledPin, HIGH);
    delay(20);
    digitalWrite(ledPin, LOW);
  }

  // Forward whatever is typed in the terminal (e.g. AT commands) to the GSM board
  if (Serial.available() > 0) {
    mySerial.write(Serial.read());
  }
}
```

We chose two mobile applications that ideally help patients collect meaningful information about their symptoms and share it with their doctors in a way that lets the doctors give better recommendations. We looked at three overall qualities in the applications: first, that using them doesn't create additional frustration about the patient's health; second, that what patients record can be easily entered; and third, that what is recorded is useful to the doctor. After researching the abundant alternatives, we chose RheumaTrack and Pain Coach, and discarded Track React and Catch My Pain. Overall, we sought the best candidates in order to decide which of the two was better. It's fair to say that both have useful and usable affordances, but RheumaTrack adds value that Pain Coach doesn't.

Overall we concluded that RheumaTrack is the better application because of one particular function: the way people input their joint pain. In a nutshell, this interface is a meaningful (useful and usable) way for both patient and doctor to visualize and record the pain condition in a really predictable manner. The process of adding a new entry (pain, medication and activity), though a bit cramped, is clearer than in other apps and quite straightforward. The dashboard follows conventional mobile GUI design standards: items and affordances are perceivable (easily readable) and predictable, and the navigation provides feedback. I noticed two simple UX elements that could be improved. First, when adding a "New Check" there's no progress bar to predict how long the task will take. Second, the "Activity" interface could improve visually in several ways: better contrast between the recorded data and the layers of pain intensity would enhance perceivability (readability), and the tags' date format can be confusing. Nevertheless, the overall purpose of the "Activity" function is very useful for doctors.

This backpack caught my eye immediately, and I’ve carried it ever since, for eight years now. Its clean, minimalist outer silhouette in charcoal and deep black communicates elegant simplicity. The continuity of the fabric from the lateral body onto the handles reinforces this minimalist perception and adds structure and durability. To this day, its outer simplicity disguises an inner complexity of many features, to the extent that some pockets often go unnoticed. Various adventurous stories with its rogue laptop compartment have made it priceless in my mind. I’m still discovering alternate uses for the side and handle straps, such as a water bottle holder, umbrella drainer or pen/marker holder. And besides its impeccable impermeability, this awesome backpack is awfully comfortable.

This other object keeps tormenting our daily experiences, even though solutions have been crafted by now. In a nutshell, this remote control frustrates people by loading them cognitively with excessive affordances (buttons). It's fair to clarify that the tasks all these affordances tackle may address interesting user needs. However, the frequency at which these needs arise doesn't make up for this cognitive load. For example, as a beginner user, I don't know what the A, B, C and D buttons are for. Even though they are not significantly bigger than other buttons, the fact that they are colored distracts from the overall reading of the control layout. A good solution already on the market is the Apple TV remote. It's consistent with the laptop remotes Apple created back in the mid-2000s, which lets people learn it easily and quickly.

I chose a gyroscope top. It resembles a slick whipping top, which embodies the concept of dynamic equilibrium quite curiously.

These objects run thanks to the angular momentum of the spinning center load. Since this exercise centers on laser cutting, I've deliberately ignored the center load that drives the momentum, because the tentative materials for it cannot be laser cut by the machines in the shop. It would be really cool to have some sort of stone-like material for this exercise, though.

The overall shapes could be any type of wood; the smallest circles, though (diameter Ds), should be hardwood to ensure a smoother spin of the center load.

Considering the 15-minute time span we had for this exercise, my approach was a communicative one. In other words, I did not care much about details, but about conveying how I planned to translate the process of fragmenting the object into the sliced fabrication method.

Time's running out! Will your concentration drive the Needle fast enough? Using the Mindwave consumer EEG headset, see how your concentration level drives the speed of the Needle's arm and, maybe, pops the balloon!

Second UI Exploration

I designed, coded and fabricated the entire experience as an excuse to explore how people approach interfaces for the first time and imagine how things could or should be used.

The current UI focuses on the experience's challenge: 5 seconds to pop the balloon. The previous UI focused more on visually communicating the concentration signal (from now on called the ATTENTION SIGNAL).

This is why the timer is prominent in size, location and color: it is bigger than the Attention signal and the Needle's digital representation, and it is positioned on the left so people read it first. Even though the Attention signal is visually represented, the recurring question at NYC Media Lab's "The Future of Interfaces" and ITP's "Winter Show" was: what should I think of?

What drives the Needle is the intensity of concentration, or overall electrical brain activity, which can be raised in different ways, for example by solving basic math problems (a recurrently successful on-site exercise). More importantly, this question might point to an underlying lack of feedback from the physical device itself; a more revealing question would be: how could feedback in BCIs be better? Another reflection on this interactive experience was: what would happen if this playful challenge were addressed differently, moving the Needle only when a certain Attention threshold is exceeded?
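The two behaviours under discussion, proportional speed and threshold-gated speed, can be compared with one mapping function. The C++ sketch below is hypothetical. It assumes the attention reading uses the Mindwave's 0 to 100 scale, and `maxSpeed` and `threshold` are illustrative parameters rather than values from the installation.

```cpp
// Hypothetical mapping from an attention reading (assumed 0-100 scale)
// to the Needle's speed.
// threshold = 0: proportional mode, speed scales with attention.
// threshold > 0: the Needle stays still until attention exceeds the
// threshold, then scales with the excess. Intended range: 0..99.
double needleSpeed(int attention, double maxSpeed, int threshold = 0) {
    if (attention < 0) attention = 0;      // clamp noisy readings
    if (attention > 100) attention = 100;
    if (attention <= threshold) return 0.0;
    return maxSpeed * (attention - threshold) / (100.0 - threshold);
}
```

With a threshold, the Needle stays still until attention rises past it, which would give players a clearer moment of feedback than a constantly creeping arm.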

By the end of 2014, the crime rate (deaths) in Bogota, Colombia had decreased. Muggings, however, remained, and at Pinedot Studios we attempted to tackle the common theft of smartphones. We created this concept app and pitched it to INTEL Colombia.