Kinetic Energy Challenge

Concept Development

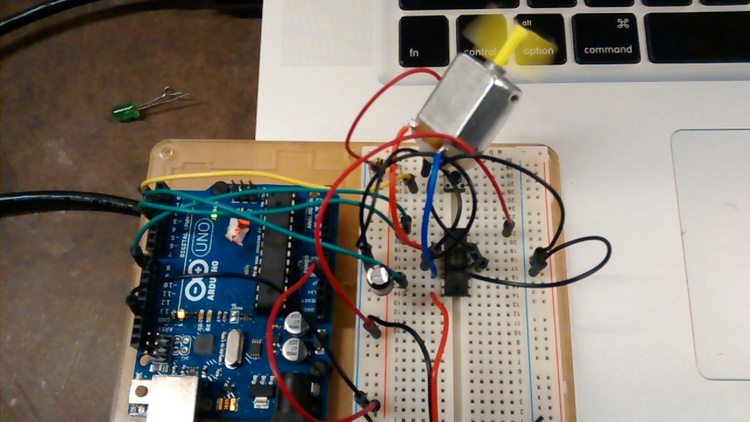

Together with Oryan Inbar, we decided to address the kinetic energy challenge by powering the LED through a bicycle trainer setup. After repurposing the stepper motor from a bill printer, we began exploring different circuit possibilities around capacitor, resistor and charging settings. In the end, our circuit is composed of the stepper's two rectified coils connected in series, a two-way switch that lets us charge the capacitors first and light the LED afterwards, three 1 F capacitors, one 330 Ohm resistor and a counter LED (which we believe lights at 1.7 V).
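To get a feel for how much energy the capacitor bank can hold, here is a minimal sketch in Python. It relies on assumptions that are not part of our build notes: the text does not record whether the three 1 F capacitors are wired in parallel or in series, so both cases are computed, and the 5.0 V charge voltage is a purely hypothetical value. The function names are made up for illustration.

```python
def equivalent_capacitance(values_farads, wiring):
    """Equivalent capacitance of a capacitor bank, in farads."""
    if wiring == "parallel":
        # Parallel capacitances simply add up.
        return sum(values_farads)
    if wiring == "series":
        # Series capacitances combine through their reciprocals.
        return 1.0 / sum(1.0 / c for c in values_farads)
    raise ValueError("wiring must be 'parallel' or 'series'")


def stored_energy(capacitance_farads, voltage_volts):
    """Energy in joules held by a capacitor: E = 1/2 * C * V^2."""
    return 0.5 * capacitance_farads * voltage_volts ** 2


if __name__ == "__main__":
    caps = [1.0, 1.0, 1.0]   # three 1 F capacitors, as in the circuit
    charge_voltage = 5.0     # hypothetical value, not a measurement
    for wiring in ("parallel", "series"):
        c_eq = equivalent_capacitance(caps, wiring)
        energy = stored_energy(c_eq, charge_voltage)
        print(f"{wiring}: {c_eq:.3f} F, {energy:.2f} J at {charge_voltage} V")
```

Swapping in the real wiring and a measured capacitor voltage would turn this into an estimate for the actual bank.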

Insights

It was surprising to see how much the voltage dropped whenever we plugged in the LED: from around 29 V to 2.2 V. We also decided to connect the two coils to two bridge rectifiers and wire them in series to power the circuit. This and the overall capacitance indicated that we needed to charge the capacitors first, before connecting the LED. This is the reason behind the two-way switch. After sorting out the general circuitry, we decided to use the body's strongest muscles as the source of power, along with an already proven mechanism, a bicycle.
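The charge-first, light-afterwards idea can be roughed out with a simple discharge model. This is a sketch under stated assumptions, not a measurement of our circuit: the LED is treated as a fixed 1.7 V drop (the value we believe applies), the capacitor bank is taken as 3 F (parallel wiring, which is an assumption), and the 5.0 V starting voltage and 1 mA cutoff current are hypothetical. Under that model the current through the 330 Ohm resistor decays exponentially with time constant R*C.

```python
import math


def initial_led_current(v_cap, v_led, r_ohms):
    """Current in amperes right after the switch connects the LED."""
    # No current flows if the capacitor sits below the LED's forward voltage.
    return max(v_cap - v_led, 0.0) / r_ohms


def time_until_current(v_cap, v_led, r_ohms, c_farads, i_min):
    """Seconds until the LED current decays to i_min.

    Model: I(t) = I0 * exp(-t / (R * C)), with the LED as a constant drop.
    """
    i0 = initial_led_current(v_cap, v_led, r_ohms)
    if i0 <= i_min:
        return 0.0
    return r_ohms * c_farads * math.log(i0 / i_min)


if __name__ == "__main__":
    v_start = 5.0    # hypothetical capacitor voltage after charging
    v_led = 1.7      # forward voltage we believe the LED needs
    r = 330.0        # series resistor from the circuit
    c = 3.0          # assumes the three 1 F capacitors are in parallel
    i_cutoff = 1e-3  # hypothetical current below which the LED looks dim
    print(f"initial current: {initial_led_current(v_start, v_led, r) * 1e3:.1f} mA")
    print(f"time to cutoff: {time_until_current(v_start, v_led, r, c, i_cutoff):.0f} s")
```

A real LED's forward voltage shifts with current, so the numbers from this model are only a first approximation.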

Conclusions

We built a bicycle trainer to couple the bicycle to the stepper motor. The motor was reused from a bill printer taken from the shop's junk shelf. The overall kinetic energy input into the stepper motor can be estimated from the gear configuration. We reused the printer's embedded gear system and found that the driver gear has an 11:1 ratio relative to the driven motor gear. This gear system, specifically its driver gear, was in turn connected to the bike's back wheel at a ratio of more or less 1:35.
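The two ratios above can be chained to estimate how fast the stepper turns. The sketch below assumes that both stages step the speed up, meaning one wheel revolution turns the driver gear about 35 times and one driver revolution turns the motor gear 11 times. That reading of the ratios is our interpretation and should be checked against the hardware. The 60 rpm wheel speed is a hypothetical example value.

```python
from fractions import Fraction


def overall_ratio(stage_ratios):
    """Output turns per input turn for a chain of gear stages.

    Each entry is the number of output turns produced by one input turn
    of that stage. Use a Fraction below 1 for a stage that slows down.
    """
    total = Fraction(1)
    for ratio in stage_ratios:
        total *= Fraction(ratio)
    return total


def motor_rpm(wheel_rpm, stage_ratios):
    """Motor speed in rpm for a given back wheel speed in rpm."""
    return wheel_rpm * overall_ratio(stage_ratios)


if __name__ == "__main__":
    # Assumed speed-up stages: wheel to driver (about 1:35), driver to motor (11:1).
    stages = [35, 11]
    wheel_speed = 60  # hypothetical wheel speed in rpm
    print(f"motor turns per wheel turn: {overall_ratio(stages)}")
    print(f"motor speed at {wheel_speed} rpm wheel: {motor_rpm(wheel_speed, stages)} rpm")
```

If either stage actually reduces the speed, passing its reciprocal (for example `Fraction(1, 11)`) gives the corresponding estimate.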